High-Level Overview of the Pokémon Card Recognition App Development

Project Goal: The primary goal of this project was to build a web application that lets users search for Pokémon cards, upload photos of them, and identify them using their camera. The app shows details about each card, lets users manage a personal collection, and uses machine learning for image recognition.

Initial App Creation

- Frontend Development:

- Technologies Used: React.js, HTML, CSS

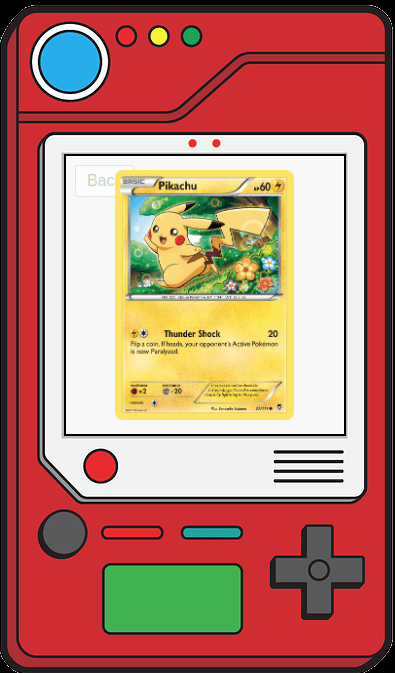

- User Interface: The UI was designed to mimic the look of a Pokédex, creating an engaging and familiar interface for users.

- Core Components:

- Search Functionality: Users can search for Pokémon cards by name.

- Card Collection: Users can view and manage their card collection.

- Camera Integration: Users can photograph a card with their camera and upload the image to identify the card and add it to their collection.

- Backend Development:

- Technologies Used: Flask, SQLite, OpenCV, NumPy

- APIs Developed:

- Search Cards API: Allows users to search for cards by name, retrieving data from the local SQLite database.

- Upload and Identify API: Allows users to upload an image to identify a card using image processing techniques.

- Database Integration: Pokémon card information was fetched from the Pokémon TCG API and stored in a local SQLite database for quick access.
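The search API and the local database fit together roughly as shown below. This is a minimal sketch, not the app's actual `App.py`. The `cards` table schema, the function names, and the card dictionary fields (`id`, `name`, `set.name`, `images.large`, loosely modeled on the Pokémon TCG API's card JSON) are all assumptions. In the Flask app, a function like `search_cards` would sit behind the search route.

```python
import sqlite3

# Hypothetical, simplified schema; the real app's columns may differ.
SCHEMA = """
CREATE TABLE IF NOT EXISTS cards (
    id        TEXT PRIMARY KEY,
    name      TEXT NOT NULL,
    set_name  TEXT,
    image_url TEXT
)
"""


def load_cards(conn, cards):
    """Insert card records (dicts loosely shaped like Pokémon TCG API JSON) into SQLite."""
    conn.execute(SCHEMA)
    conn.executemany(
        "INSERT OR REPLACE INTO cards (id, name, set_name, image_url) VALUES (?, ?, ?, ?)",
        [
            (c["id"], c["name"], c.get("set", {}).get("name"), c.get("images", {}).get("large"))
            for c in cards
        ],
    )
    conn.commit()


def _escape_like(text):
    """Escape LIKE wildcards so user input is matched literally."""
    return text.replace("\\", "\\\\").replace("%", "\\%").replace("_", "\\_")


def search_cards(conn, query, limit=20):
    """Substring search on card name (SQLite's LIKE ignores case for ASCII letters)."""
    rows = conn.execute(
        "SELECT id, name, set_name FROM cards "
        "WHERE name LIKE ? ESCAPE '\\' ORDER BY name LIMIT ?",
        (f"%{_escape_like(query)}%", limit),
    ).fetchall()
    return [{"id": r[0], "name": r[1], "set": r[2]} for r in rows]
```

Escaping `%` and `_` keeps a user's query from being treated as a wildcard pattern, and the `?` placeholders let SQLite handle quoting, which avoids SQL injection.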

- App Files:

- Frontend (App.js): Manages the state and interactions of the application, handling user input and displaying results.

- Styling (App.css): Defines the visual style of the application, ensuring the UI resembles a Pokédex.

- Backend (App.py): Handles API requests, processes images using OpenCV, and queries the SQLite database for card information.
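The post doesn't specify which image processing techniques the identify endpoint used. One common, dependency-free way to match a photo against known card images is perceptual hashing. The sketch below implements an average hash with nearest-neighbor lookup by Hamming distance. It is an illustrative substitute, not the app's method. It assumes images are already decoded to grayscale 2D lists (in `App.py` that step would be OpenCV's job, for example via `cv2.imdecode`, `cv2.cvtColor`, and `cv2.resize`), and `identify`, `max_distance`, and the reference-hash dictionary are hypothetical.

```python
def downsample(gray, size=8):
    """Block-average a grayscale image (list of rows) down to size x size.

    Assumes the image is at least size x size pixels.
    """
    h, w = len(gray), len(gray[0])
    out = []
    for i in range(size):
        r0, r1 = i * h // size, (i + 1) * h // size
        row = []
        for j in range(size):
            c0, c1 = j * w // size, (j + 1) * w // size
            block = [gray[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def average_hash(gray, size=8):
    """Pack one bit per cell: 1 where the cell is brighter than the mean cell."""
    pixels = [p for row in downsample(gray, size) for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a, b):
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def identify(query_gray, reference_hashes, max_distance=10):
    """Return the card ID with the closest stored hash, or None if none is close enough."""
    query_hash = average_hash(query_gray)
    best_id, best_dist = None, max_distance + 1
    for card_id, ref_hash in reference_hashes.items():
        dist = hamming(query_hash, ref_hash)
        if dist < best_dist:
            best_id, best_dist = card_id, dist
    return best_id
```

Average hashing tolerates global brightness shifts but is sensitive to rotation and perspective skew. That sensitivity is one reason a learned model is attractive for handheld camera photos.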

Machine Learning Integration

- Data Collection:

- Data Source: Images and metadata of approximately 17,000 Pokémon cards were sourced from the Pokémon TCG API available on GitHub.

- Quality and Quantity: The dataset covered roughly 17,000 cards and included high-quality images of each one.

- Data Augmentation:

- Techniques Used:

- Image transformations such as rotations, flips, and color adjustments.

- Augmentation made the dataset more robust by simulating real-world variation such as rotated, mirrored, or differently lit cards.
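The three kinds of transformation listed above can be sketched in plain Python on an image stored as a list of pixel rows. This illustration makes some simplifying assumptions: rotations are limited to multiples of 90 degrees, color adjustment is reduced to scaling grayscale brightness, and the function names and the 0.7 to 1.3 brightness range are hypothetical. Training pipelines usually rely on library transforms that also support arbitrary angles and per-channel color jitter.

```python
import random


def hflip(img):
    """Mirror an image (list of rows) left to right."""
    return [row[::-1] for row in img]


def rotate90(img):
    """Rotate an image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def adjust_brightness(img, factor):
    """Scale pixel intensities by `factor`, clipping to the 0-255 range."""
    return [[max(0, min(255, round(p * factor))) for p in row] for row in img]


def augment(img, rng):
    """Apply a random combination of flip, rotation, and brightness change."""
    if rng.random() < 0.5:
        img = hflip(img)
    for _ in range(rng.randrange(4)):  # 0, 90, 180, or 270 degrees
        img = rotate90(img)
    return adjust_brightness(img, rng.uniform(0.7, 1.3))
```

Calling `augment` several times on the same card image with a seeded `random.Random` yields several distinct but reproducible training samples.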


- Model Training:

- Machine Learning Model: A convolutional neural network (CNN) was used for image recognition.

- Training Process: The model was trained using the augmented dataset, with adjustments to epochs, batch size, and other hyperparameters to improve accuracy.
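The post doesn't name the framework or the network's layers, so rather than guess at the architecture, this sketch shows the basic operations a typical CNN stacks: a convolution (implemented, as in most deep learning libraries, as cross-correlation), a ReLU activation, and 2x2 max pooling. It is plain Python for readability. A real model would use a framework such as PyTorch or TensorFlow and learn its kernel weights during training.

```python
def conv2d(img, kernel):
    """Valid (no padding, stride 1) 2D cross-correlation, as computed by CNN conv layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(img) - kh + 1, len(img[0]) - kw + 1
    return [
        [
            sum(img[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(fmap):
    """Zero out negative activations."""
    return [[max(0, v) for v in row] for row in fmap]


def max_pool2x2(fmap):
    """2x2 max pooling with stride 2 (drops a trailing odd row or column)."""
    return [
        [
            max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
            for j in range(0, len(fmap[0]) - 1, 2)
        ]
        for i in range(0, len(fmap) - 1, 2)
    ]
```

For example, the hand-picked 1x2 kernel `[[-1, 1]]` responds where brightness increases from left to right, which is a vertical edge. A trained CNN learns many such kernels and stacks these layers so later ones respond to larger patterns like artwork and text regions.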

- Model Evaluation:

- Initial Results: Initial accuracy was low, which prompted further fine-tuning.

- Fine-Tuning: The model was trained for additional epochs, and the batch size and other hyperparameters were tuned to improve performance.
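The post doesn't say how accuracy was measured. A common approach is to score the model's predictions on images held out from training. Below is a minimal sketch of top-1 and top-k accuracy, assuming the model outputs one score per card class. The function name and input layout are illustrative. With thousands of card classes, top-k accuracy (is the correct card among the k best guesses?) can reveal progress that top-1 accuracy hides.

```python
def top_k_accuracy(scores, labels, k=1):
    """Fraction of samples whose true class is among the k highest-scoring classes.

    scores: one list of per-class scores per sample.
    labels: the true class index for each sample.
    """
    if not labels or len(scores) != len(labels):
        raise ValueError("need one score list per label, and at least one sample")
    hits = 0
    for row, label in zip(scores, labels):
        top = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        if label in top:
            hits += 1
    return hits / len(labels)
```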

- Deployment:

- Model Integration: The trained model was integrated into the backend API to handle image uploads and return card details.

- API Endpoint: The API endpoint for processing image uploads was enhanced to return predictions based on the trained model.
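One way the upload endpoint might turn the model's raw outputs into a response is to apply a softmax and return the most likely cards with confidence scores. This is a hedged sketch. `predict_response`, the `class_to_card_id` list that maps output indices to card IDs, and the response shape are assumptions. In Flask, the returned dict could be passed to `jsonify`.

```python
import math


def softmax(logits):
    """Numerically stable softmax: subtract the largest logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_response(logits, class_to_card_id, k=3):
    """Build a JSON-serializable dict listing the k most likely cards."""
    probs = softmax(logits)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    return {
        "predictions": [
            {"card_id": class_to_card_id[i], "confidence": round(probs[i], 4)}
            for i in ranked
        ]
    }
```

Subtracting the largest logit leaves the probabilities unchanged, because softmax is invariant to adding a constant to every input, but it prevents `math.exp` from overflowing on large logits.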

Next Steps:

- Detailed Posts: The next series of blog posts will dive deeper into each section, providing insights and a closer look at the code and technologies used.

- Future Enhancements: Plans include further refining the machine learning model, adding more features to the app, and optimizing performance.

This outline gives a high-level overview of our journey in developing the Pokémon Card Recognition App; stay tuned for the detailed posts that walk through each step. And remember, all of this was created by ChatGPT… even this post. 🙂